Rhiannon Williams – MIT Technology Review

A new system was able to capture exact words and phrases from the brain activity of someone listening to podcasts.

A noninvasive brain-computer interface capable of converting a person’s thoughts into words could one day help people who have lost the ability to speak as a result of injuries like strokes or conditions including ALS.

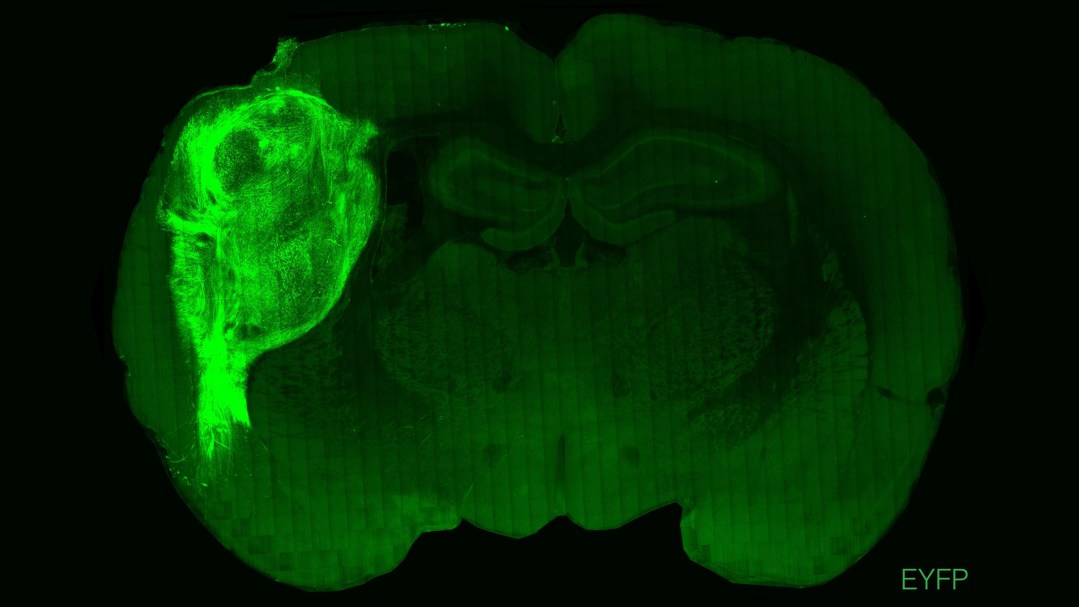

In a new study, published in Nature Neuroscience today, a model trained on functional magnetic resonance imaging scans of three volunteers was able to predict whole sentences they were hearing with surprising accuracy—just by looking at their brain activity. The findings demonstrate the need for future policies to protect our brain data, the team says.